Generation of the Extended Attention Mask, by multiplying a classic... | Download Scientific Diagram

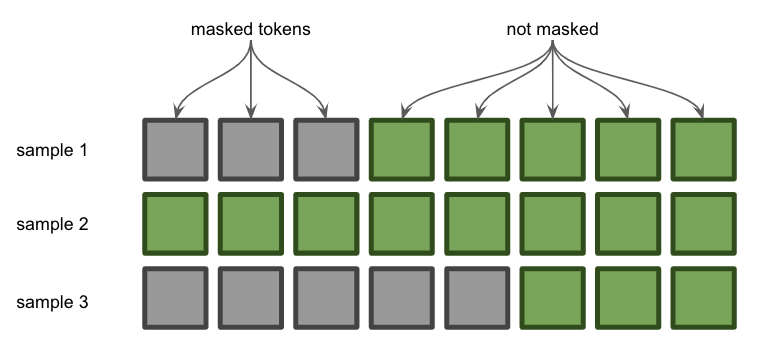

Illustration of the three types of attention masks for a hypothetical... | Download Scientific Diagram

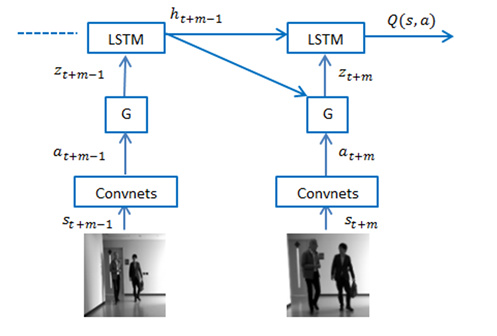

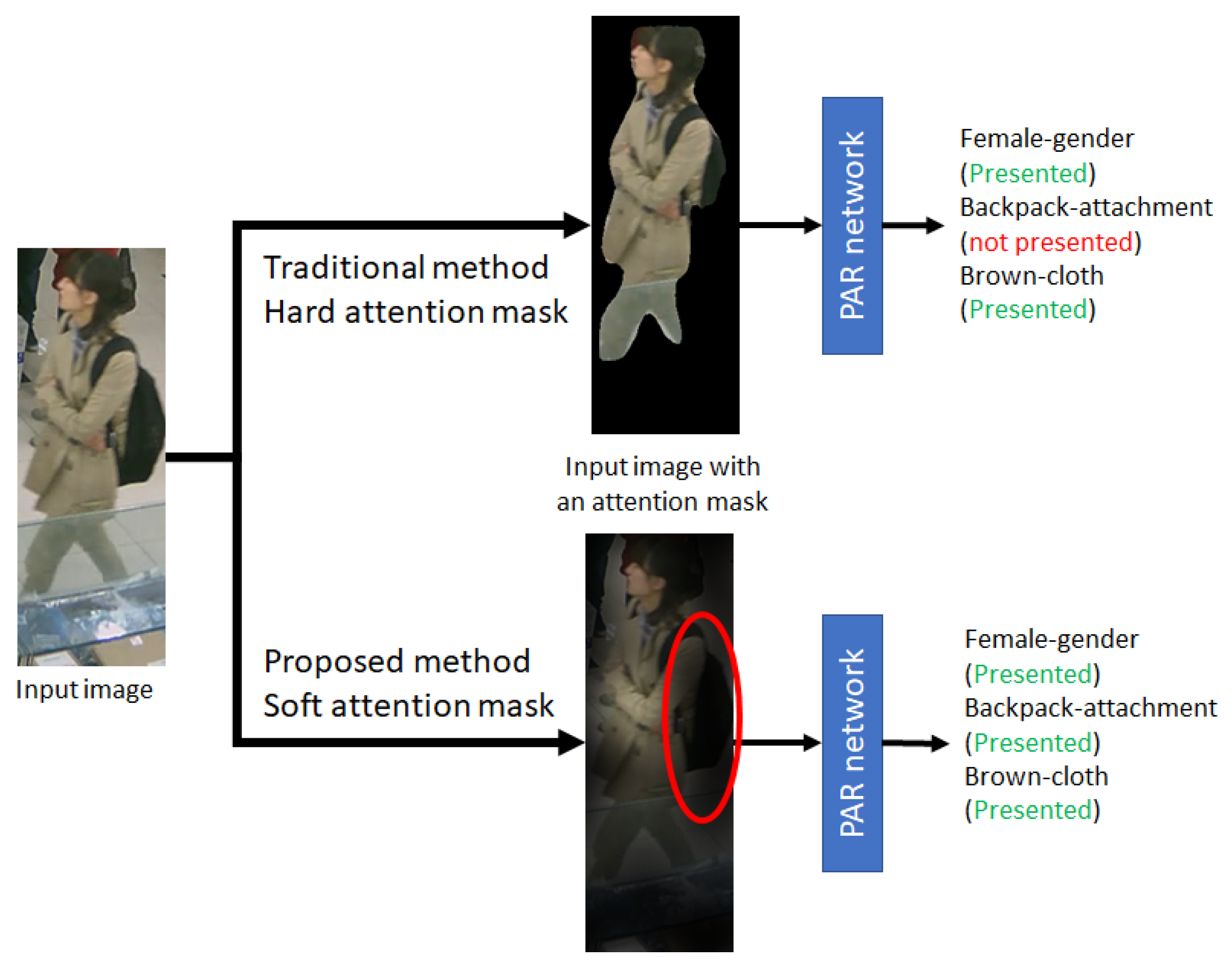

J. Imaging | Free Full-Text | Skeleton-Based Attention Mask for Pedestrian Attribute Recognition Network

a The attention mask generated by the network without attention unit. b... | Download Scientific Diagram

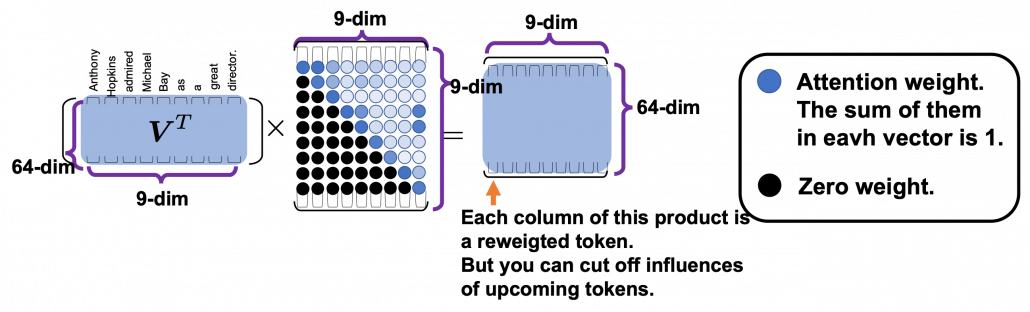

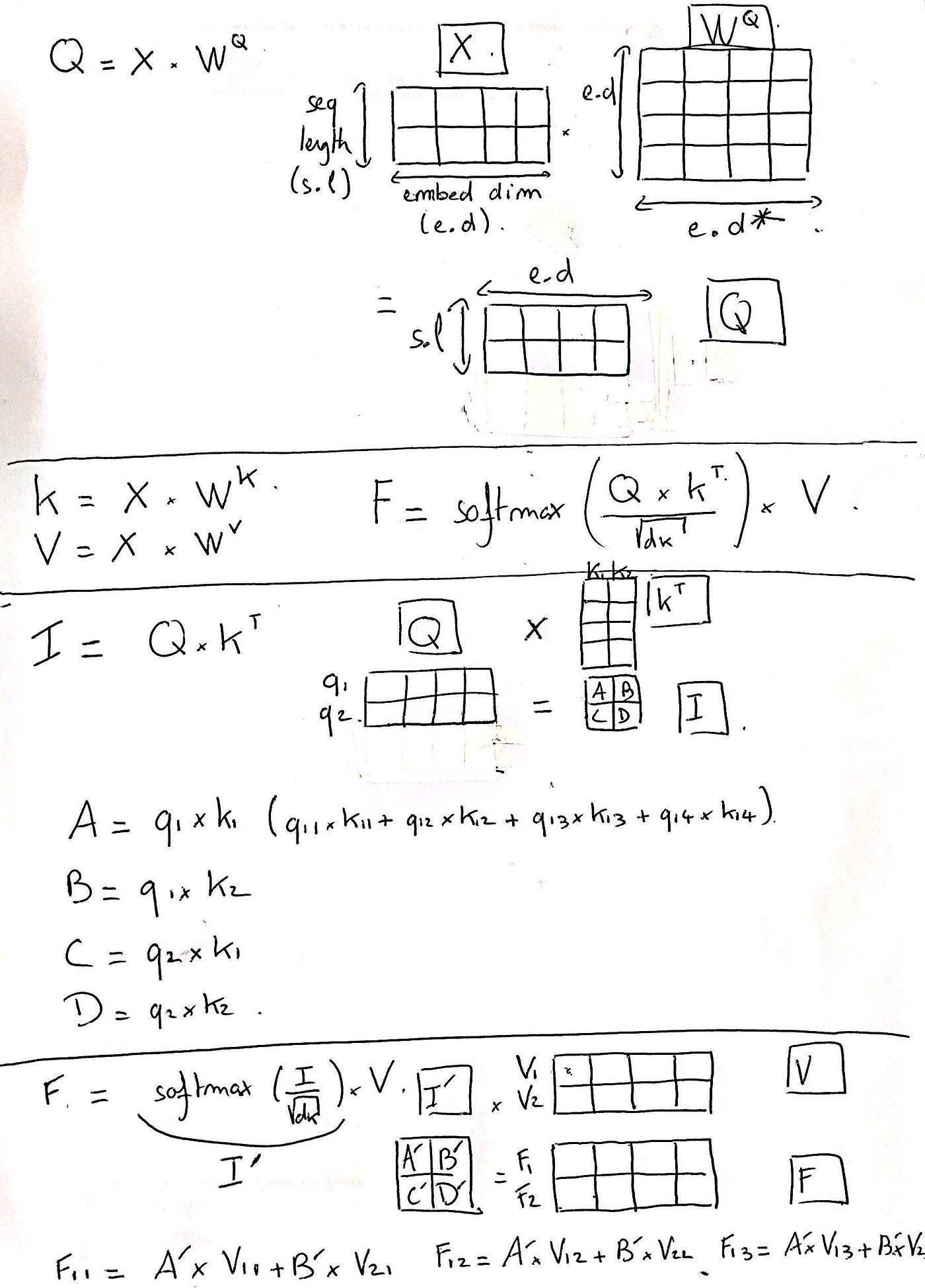

Transformers Explained Visually (Part 3): Multi-head Attention, deep dive | by Ketan Doshi | Towards Data Science

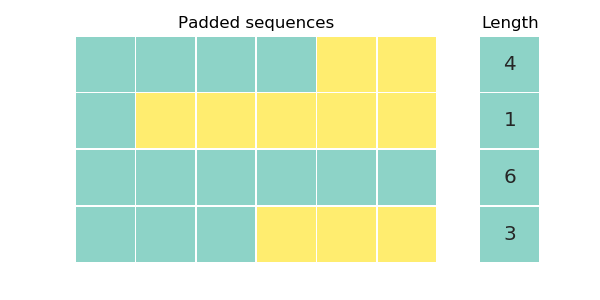

Positional encoding, residual connections, padding masks: covering the rest of Transformer components - Data Science Blog

How to implement seq2seq attention mask conviniently? · Issue #9366 · huggingface/transformers · GitHub

![[D] Causal attention masking in GPT-like models : r/MachineLearning](https://preview.redd.it/d-causal-attention-masking-in-gpt-like-models-v0-ygipbem3cqv91.png?width=817&format=png&auto=webp&s=67002a5b7c32166020a325feaa4a8abaa86dc7cc)

![[PDF] Masked-attention Mask Transformer for Universal Image Segmentation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/658a017302d29e4acf4ca789cb5d9f27983717ff/3-Figure2-1.png)